One of the major stories of recent elections has been the failure of polls to detect the full extent of support for Republican candidates. One likely factor is the refusal of many of their supporters to respond to voter surveys.

Just as many people decline to respond to political pollsters, people are increasingly refusing to respond to the various surveys the government’s statistical agencies field to gather data about people’s work, income, and spending patterns. The Current Population Survey (CPS), the main source for information on employment, unemployment, health care coverage, and income, has seen a substantial decline in response rates over the last four decades. As of last year, the coverage rate was just over 85 percent, meaning that the CPS did not get responses from almost 15 percent of the households that were targeted for the survey.

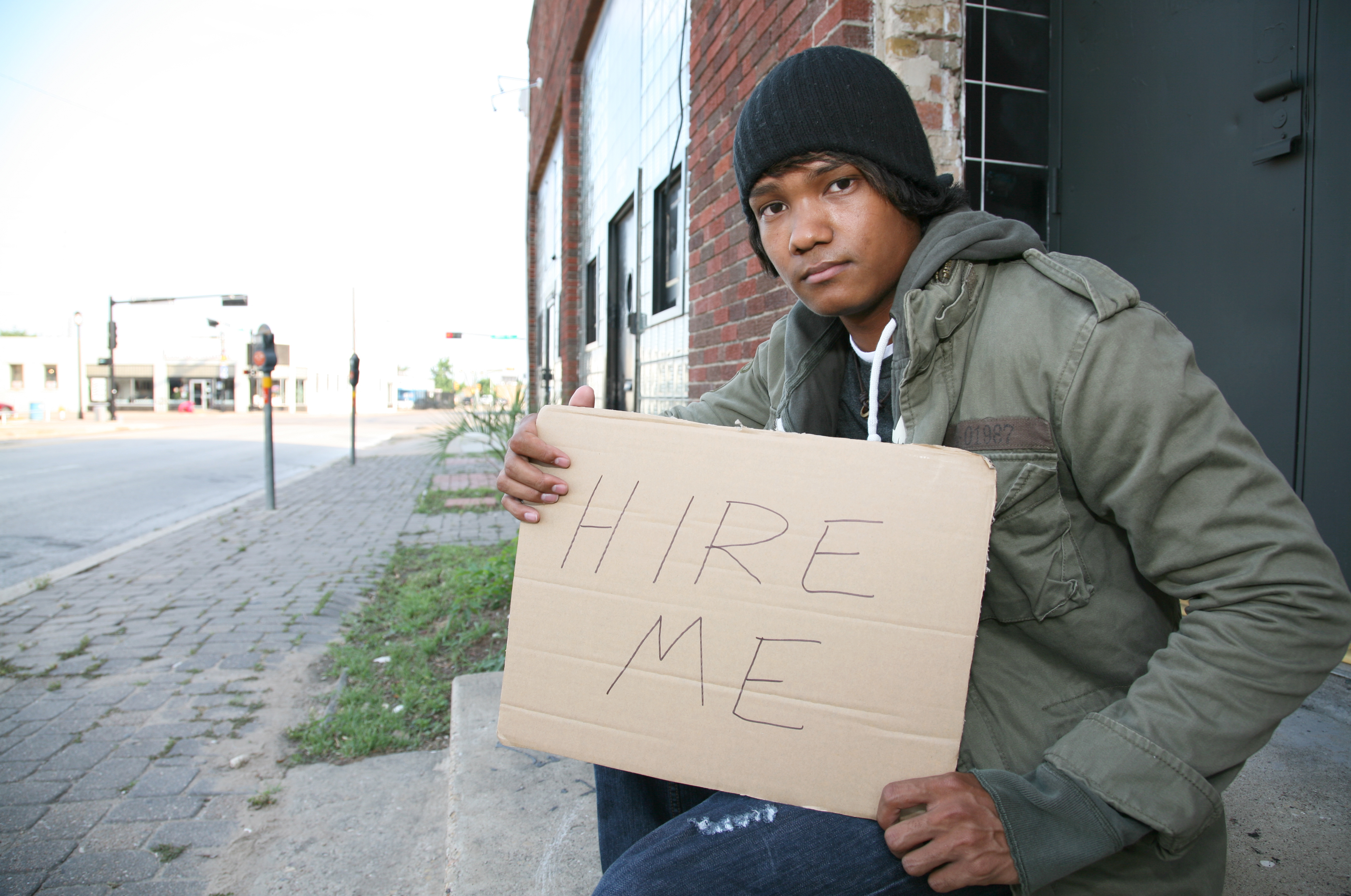

The rates of non-response differ substantially by demographic group. Older people and white people tend to respond at much higher rates than the young and people of color. The lowest response rate is for young Black men, where the coverage rate is less than 70 percent.

The drop in response rates would not be a big deal if the people who did not respond were like the people who did respond. This is effectively what the Bureau of Labor Statistics (BLS) assumes with its current procedures. Controlling for age, gender, race, education, and a number of other factors, BLS assumes that the people who they were not able to survey were just like the people who they did survey.

For purposes of the employment and unemployment statistics, they assume that a young Black man with a high school degree, who they did not survey, was equally likely to be employed/unemployed as a young Black man with a high school degree who they did survey. As we saw with the last two presidential elections, this may not be accurate.

We sought to test the accuracy of the BLS methodology by taking advantage of the longitudinal features of the CPS. The same person is in the survey for four consecutive months. This means that we can examine how people transition between employment, unemployment, and non-employment (people not working, but also not looking for work) over this four-month period. We can then compare the transitions for the people we do observe over the four-month period with the employment status the BLS imputes for people who are missing from the survey.

To take a hypothetical example, suppose that someone who is unemployed in one month has a 50 percent probability of being unemployed in the second month. By contrast, say that a person who is employed in the first month has only a 10 percent probability of being unemployed in the second month.

Suppose that half the people who are missing in the second month had been unemployed in the first month and half had been employed. If the people who were missing from the survey followed the same transition process as the people who were included in the survey, then we could infer that the unemployment rate for the people we missed in that month was 30 percent (half of 50 percent, plus half of 10 percent).
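The arithmetic in this hypothetical can be written out as a short Python sketch. The function name and the dictionaries are illustrative only; the numbers are the made-up ones from the example, not estimates from the CPS.

```python
def imputed_unemployment_rate(prior_shares, p_unemployed_next):
    """Weighted average of next-month unemployment probabilities.

    prior_shares: share of the missing group in each prior-month status.
    p_unemployed_next: probability of being unemployed next month,
        given each prior-month status (taken from observed respondents).
    """
    return sum(prior_shares[s] * p_unemployed_next[s] for s in prior_shares)


# Hypothetical values from the example in the text.
prior_shares = {"unemployed": 0.5, "employed": 0.5}
p_unemployed_next = {"unemployed": 0.5, "employed": 0.1}

rate = imputed_unemployment_rate(prior_shares, p_unemployed_next)
print(f"Imputed unemployment rate for the missing group: {rate:.0%}")
```

The result is just the mix of prior statuses among the missing, weighted by the transition probabilities observed for people who stayed in the survey.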

This was the basic approach that we pursued in our analysis. We effectively assumed that the transitions for people who were missing from the survey, given their prior employment status, were the same as for the people who were included in the survey. (We also used a somewhat more complex imputation process, where we took advantage of later transitions where people re-entered the survey.) We also assumed that the people who were never surveyed, in any of the four periods, were as likely to be unemployed as the people who missed one or more interviews.
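A minimal sketch of this kind of transition-based imputation is below. It is not the code used in the analysis: the data are toy values, the function names are hypothetical, and it covers only a single month-to-month step, without the more complex imputation for people who re-enter the survey or the treatment of people who were never surveyed.

```python
from collections import Counter, defaultdict

STATES = ("E", "U", "N")  # employed, unemployed, not in the labor force


def transition_probs(pairs):
    """Estimate P(month-2 status | month-1 status) from people seen in both months."""
    counts = defaultdict(Counter)
    for prior, nxt in pairs:
        if prior is not None and nxt is not None:
            counts[prior][nxt] += 1
    return {
        prior: {s: c[s] / sum(c.values()) for s in STATES}
        for prior, c in counts.items()
    }


def impute_missing(pairs, probs):
    """Expected month-2 status counts for people who are missing in month 2."""
    expected = dict.fromkeys(STATES, 0.0)
    for prior, nxt in pairs:
        if prior is not None and nxt is None:
            for s in STATES:
                expected[s] += probs[prior][s]
    return expected


def unemployment_rate(counts):
    """Unemployed as a share of the labor force (employed plus unemployed)."""
    return counts["U"] / (counts["E"] + counts["U"])


# Toy panel of (month-1 status, month-2 status); None marks a missed interview.
pairs = (
    [("E", "E")] * 18 + [("E", "U")] * 2
    + [("U", "U")] * 5 + [("U", "E")] * 5
    + [("E", None)] * 2 + [("U", None)] * 2
)

probs = transition_probs(pairs)
missing = impute_missing(pairs, probs)
observed = Counter(nxt for _, nxt in pairs if nxt is not None)
combined = {s: observed[s] + missing[s] for s in STATES}

print(f"observed: {unemployment_rate(observed):.1%}")
print(f"missing (imputed): {unemployment_rate(missing):.1%}")
print(f"combined: {unemployment_rate(combined):.1%}")
```

In this toy panel the unemployed are over-represented among the people who drop out, so the imputed rate for the missing group is higher than the rate for the people observed, and the combined rate is pulled up accordingly.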

Since people who were unemployed were in fact more likely to be missing in subsequent periods, and since the unemployed were also more likely to be unemployed in a subsequent period, this methodology led to an adjusted unemployment rate that is substantially higher than the BLS measure. For the population as a whole, our methodology led to an unemployment rate that is on average 0.7 percentage points higher than the BLS measure for the years 2003 to 2019.

The gap for Black people and young Black men is considerably larger, since their coverage rates are much lower than for white households. Our adjusted unemployment rate for Black workers is on average 2.6 percentage points higher than the BLS measure. For Black men between the ages of 16 and 24, the adjusted difference is 3.6 percentage points, and for Black men between the ages of 25 and 34, the gap is 3.0 percentage points. The unemployment rate for Black women is understated by about 2.4 percentage points. This methodology led to an increase in the unemployment rate for Hispanics of 1.5 percentage points.

As can be seen, this treatment of missing observations would lead to a substantially different picture of the labor market. Most importantly, it implies that the gap between labor market outcomes for Blacks and Hispanics, and the outcomes for whites, is even larger than the BLS data indicate.

To be clear, we cannot know that our imputation is correct. It is possible that the transitions for people missing from the survey are different from those for people who remain in the survey. However, our analysis does show the importance of BLS’s assumption that the people who they don’t talk to are just like the ones they do talk to.

If our assumption about transitions is correct, then the missing are substantially more likely to be unemployed than the people surveyed, just as the people who the pollsters did not talk to were substantially more likely to be Trump voters than the people who they did talk to.